By leveraging bio-inspired vision sensors and intelligent control algorithms, a new system can perceive the environment with exceptional clarity and make real-time adjustments to ensure accurate robotic drilling operations.

Robotic drilling systems play a crucial role in various industries, including manufacturing, construction, and resource extraction. Achieving precise positioning of these drilling systems is essential for ensuring accuracy, efficiency, and safety in drilling operations. To address this challenge, researchers have been exploring advanced control techniques that can improve the positioning accuracy of robotic drilling systems.

One such technique that has shown promising results is neuromorphic vision-based control. By leveraging the principles of neuromorphic engineering and incorporating vision-based sensing capabilities, this approach offers a novel solution for enhancing the precision of robotic drilling.

A team of researchers from Khalifa University has shed light on the potential of neuromorphic vision-based control in robotic drilling systems. Abdulla Ayyad, Research Associate, Mohamad Halwani, PhD student, Dr. Rajkumar Muthusamy, Postdoctoral Fellow, Dr. Fahad Almaskari, Assistant Professor of Aerospace Engineering, and Dr. Yahya Zweiri, Associate Professor and Director of the KU Advanced Research and Innovation Center, collaborated with Dewald Swart at Strata Manufacturing to develop a neuromorphic visual controller approach for precise robotic machining. They published their work in Robotics and Computer-Integrated Manufacturing, one of the top journals across the fields of mathematics, engineering and computer science.

Fig. 1. A neuromorphic visual controller approach for precise robotic machining.

“The automation of cyber-physical manufacturing processes is a critical aspect of the fourth industrial revolution (4IR),” Ayyad says. “Between 2008 and 2018, the number of industrial robots shipped annually more than tripled, and by 2024, more than 500,000 industrial robots are expected to ship each year. The UAE specifically is aiming to become a global hub in 4IR technology and our work is aligned directly with this vision to support solutions for increased efficiency, productivity and safety.”

“The manufacturing industry is currently witnessing a paradigm shift with the unprecedented adoption of industrial robots, and machine vision is a key perception technology that enables these robots to perform precise operations in unstructured environments,” Dr. Zweiri says. “Neuromorphic vision is a recent technology with the potential to address the challenges of conventional vision with its high temporal resolution, low latency, and wide dynamic range. In this paper, and for the first time, we propose a novel neuromorphic vision-based controller for robotic machining applications to enable faster and more reliable operation, and present a complete robotic system capable of performing drilling tasks with sub-millimeter accuracy.”

Automating certain manufacturing processes means greater performance, productivity, efficacy, and safety, and drilling is one of the processes ripe for automation. It is widespread, especially in the automotive and aerospace industries, where high-precision drilling is essential because the quality of drilling is correlated with the performance and fatigue life of the end products.

Traditional automation techniques for drilling and similar machining processes depend on computer numerical control (CNC) equipment for high precision and repeatability. However, CNC equipment is limited in functionality and workspace, and it requires substantial investment. Dr. Zweiri says industrial robots have emerged in recent years as a promising alternative to CNC equipment, due to their cost efficiency, wide range of functionality, and ability to adapt to variations in the environment.

“Despite several successful examples using robots in industrial machining applications, repeatability remains the main challenge in robotic machining: Errors originate either from the relatively low stiffness of robot joints or the imperfect positioning and localization of a workpiece relative to the robot,” Dr. Zweiri explains. “These errors can be remedied by real-time guidance and closed-loop control based on sensory feedback and metrology systems.”

In its research, the team focused on developing a comprehensive framework that combines advanced vision sensors, sophisticated algorithms, and robust control mechanisms. The integration of neuromorphic vision sensors enabled the robotic drilling systems to gather real-time visual data of the drilling environment, including the positioning of drilling targets, surface irregularities, and potential obstacles. By leveraging these visual cues, the control algorithms could make precise adjustments to the drilling system’s position, ensuring accurate target acquisition and reliable drilling performance.

Previous attempts in the literature to improve robotic manufacturing have focused on vision-based feature detection, combining cameras with laser distance sensors and other target localization techniques. But all have used conventional frame-based cameras, which suffer from latency, motion blur, low dynamic range, and poor perception in low-light conditions.

“Standard cameras also commonly have a frame rate of less than 200 frames per second,” Dr. Zweiri explains. “Computing the complex algorithm for vision processing via such hardware will take considerable time. Accelerating vision processing will greatly improve grasping efficiency.”

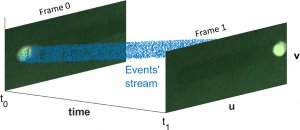

Enter the event camera. Also known as a dynamic vision sensor, an event camera is an advanced type of image sensor that operates on the principle of event-driven vision.

An event camera does not capture images using a shutter as conventional frame cameras do. Instead, each pixel operates independently and asynchronously, reporting changes in brightness as they occur and staying silent otherwise. Event cameras, also called neuromorphic vision sensors, are inspired by biological systems such as fly eyes, which can sense data in parallel and asynchronously in real time.

When an individual pixel-level change in brightness is detected, it is reported as an “event,” indicating a change in the scene. Each event is time-stamped with high temporal precision, providing precise information about when the change occurred. This temporal resolution is exceptionally high, typically in the microsecond range, enabling the camera to capture fast and dynamic scenes accurately, including rapid motion and high-frequency events.
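The event principle described above can be illustrated with a short sketch. It uses a simplified, idealized model in which a pixel fires whenever its log-brightness has moved by a fixed contrast threshold since its last event. The function name `pixel_events`, the `Event` fields, and the threshold value of 0.2 are assumptions made for illustration and are not taken from the team's system or from any particular camera.

```python
import math
from typing import List, NamedTuple, Sequence, Tuple


class Event(NamedTuple):
    t_us: int      # timestamp in microseconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 = brighter, -1 = darker


def pixel_events(samples: Sequence[Tuple[int, float]], x: int, y: int,
                 threshold: float = 0.2) -> List[Event]:
    """Emit events for one pixel from (t_us, brightness) samples.

    Idealized model (an assumption): an event fires each time the
    log-brightness differs from the level stored at the last event
    by at least `threshold`. Brightness values must be positive.
    """
    events: List[Event] = []
    ref = math.log(samples[0][1])  # reference level at start
    for t_us, brightness in samples[1:]:
        delta = math.log(brightness) - ref
        # A large change produces several events with the same timestamp.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append(Event(t_us, x, y, polarity))
            ref += polarity * threshold
            delta = math.log(brightness) - ref
    return events


# A pixel that is constant, brightens once, then is constant again.
samples = [(0, 100.0), (50, 100.0), (100, 150.0), (150, 150.0)]
events = pixel_events(samples, x=3, y=7)
for e in events:
    print(e)
```

In this toy run the pixel stays silent while the brightness is constant and reports only at the moment of change, which is the behavior that distinguishes an event stream from a sequence of full frames.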

Fig. 2. Visualization of the output of neuromorphic cameras and conventional cameras.

“In comparison to traditional frame-based vision sensors, event-driven neuromorphic sensors have low latency, a high dynamic range and high temporal resolution,” Dr. Zweiri says. “Using these sensors results in a stream of events with a microsecond-level time stamp, no motion blur, low-light operation and a faster response and higher sampling rate.”

The research team says the event-based neuromorphic sensor has the potential to address the challenges of conventional machine vision, but it also introduces new challenges in developing perception and control algorithms to suit its unconventional and asynchronous output. The well-established algorithms for frame-based cameras simply won’t do.

“Here we developed a two-stage neuromorphic vision-based controller to perform a robotic drilling task with sub-millimeter level accuracy,” Dr. Zweiri says. “To our knowledge, this is the first system of its kind to employ neuromorphic vision technology for robotic machining applications. The first stage of our system uses a multi-view 3D reconstruction approach to help the robot line itself up. Then, the second stage regulates any residual errors using a novel event-based drilling hole detection algorithm.”

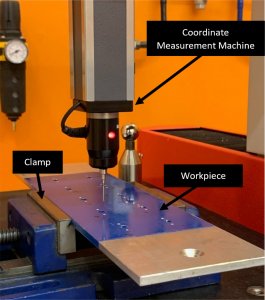

Fig. 3. Positioning errors are measured using a Coordinate Measurement Machine, where the position of each drilled hole is measured relative to its corresponding reference hole.

The neuromorphic camera in the ARIC team’s system captures the visual scene and generates a continuous stream of asynchronous events, each time-stamped to provide high-precision temporal information. This stream is processed using neuromorphic vision algorithms inspired by the principles of the human visual system. These algorithms extract relevant features and information from the event stream, such as edges, corners, and motion cues. The information is then used to estimate the precise position and orientation of the robotic drilling system. By analyzing the event-based data, the system can track the motion of the drilling robot and calculate its position relative to the desired target or reference point. The estimated position is fed into the control system of the robotic drill, which generates control commands to adjust the position and orientation in real time, ensuring precise positioning.

The ARIC system operates in a closed-loop manner, continuously acquiring event-based visual information, estimating the position and adjusting the control commands accordingly. This closed-loop feedback enables the system to adapt and maintain precise positioning even in dynamic and changing environments.
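The closed-loop idea can be sketched in a few lines. The example below is a generic proportional correction loop, not the team's controller: the function name `closed_loop_align`, the gain of 0.5, and the 0.05 mm tolerance are all assumptions, and the vision estimate is replaced by a direct computation of the error.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def closed_loop_align(start: Point, target: Point, gain: float = 0.5,
                      tolerance_mm: float = 0.05,
                      max_steps: int = 100) -> List[Point]:
    """Move a tool from `start` toward `target` (x, y in mm), step by step.

    Each iteration measures the remaining error, stops if it is within
    tolerance, and otherwise corrects by a fraction (`gain`) of the error.
    """
    x, y = start
    history = [(x, y)]
    for _ in range(max_steps):
        # Stand-in for the vision-based estimate (an assumption):
        # the error is computed directly from known positions.
        ex, ey = target[0] - x, target[1] - y
        if (ex * ex + ey * ey) ** 0.5 <= tolerance_mm:
            break
        # Proportional correction toward the target.
        x += gain * ex
        y += gain * ey
        history.append((x, y))
    return history


target = (10.0, -4.0)
path = closed_loop_align(start=(0.0, 0.0), target=target)
final_error = ((target[0] - path[-1][0]) ** 2
               + (target[1] - path[-1][1]) ** 2) ** 0.5
print(f"steps: {len(path) - 1}, final error: {final_error:.3f} mm")
```

Because each pass re-measures the error before correcting, the loop keeps converging even if the target estimate shifts between iterations, which is the property the closed-loop design relies on.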

“This work is directly applicable to the national aerospace manufacturing industry through our collaboration with Strata, and is also beneficial to other priority industries in the UAE including petrochemicals, energy, biomedical and agriculture,” Ayyad adds. “Technologies like ours will help build the UAE’s advanced manufacturing reputation, as a developer and exporter of intelligent manufacturing solutions.”

The findings of this paper, Ayyad explains, were used to develop the automated drilling robot used in the vertical fin production line at Strata, making their solution the first robot of its kind to be adopted by a prominent client. “Our partnership with the Mubadala group of companies represents a unique model for joint R&D between industry and academia that provides an innovative environment for integrating science and engineering,” Ayyad says. “Our academic research is industrially relevant and economically significant, and serves as a platform for building industrial know-how for students.”

The team tested its system by drilling nutplates, components commonly used in aerospace manufacturing applications. Nutplates create permanent and fixed nuts that support a threaded bolt. They need to be manufactured and drilled accurately for precision applications. The results showed that the team’s guidance system can position the robotic drill with an average positional error of just 88 micrometers. This is less than the thickness of the average sheet of paper and just a fraction larger than a grain of sand.
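For readers who want to see how an average positional error of this kind is typically computed, here is a minimal sketch. It assumes each drilled hole is described by its planar offset from the corresponding reference hole, and it averages the Euclidean lengths of those offsets. The function name and the offset values are made up for illustration. They are not the team's measurements.

```python
import math
from typing import Sequence, Tuple


def mean_positional_error_um(offsets_mm: Sequence[Tuple[float, float]]) -> float:
    """Average Euclidean offset between drilled and reference holes.

    `offsets_mm` holds (dx, dy) offsets in millimeters; the result is
    returned in micrometers.
    """
    errors_um = [math.hypot(dx, dy) * 1000.0 for dx, dy in offsets_mm]
    return sum(errors_um) / len(errors_um)


# Made-up offsets in mm, for illustration only (not measured data).
offsets_mm = [(0.06, 0.08), (0.03, -0.04), (-0.09, 0.12)]
mean_error_um = mean_positional_error_um(offsets_mm)
print(f"mean positional error: {mean_error_um:.1f} micrometers")
```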

Fig. 4. Experimental setup for testing the proposed neuromorphic vision-based drilling method.

The team’s findings revealed several significant advantages of neuromorphic vision-based control for robotic drilling systems. First, the use of bio-inspired vision sensors allowed the robots to capture high-resolution visual information, surpassing the capabilities of traditional vision systems. This enhanced perception facilitated improved target recognition, leading to more accurate drilling operations and reduced errors.

Second, the real-time responsiveness of the neuromorphic vision sensors enables the robotic drilling systems to adapt swiftly to changes in the drilling environment. This dynamic adaptability is particularly valuable in scenarios where the drilling targets are not static or when unexpected obstacles emerge during the drilling process. By continuously analyzing the visual data and making rapid adjustments, the robotic drilling system is able to maintain its precision and ensure optimal drilling outcomes.

“The main challenge currently obstructing the wide adoption of industrial robots is their inability to carry out multiple tasks accurately, while ensuring the safety of the robot and its surroundings,” Ayyad says. “Today’s industries need robots operating on large workpieces across various parts of the factory, and to avoid having multiple expensive robots, they need autonomous mobility. However, this comes at the cost of higher uncertainty and possible loss of precision, which can degrade the overall manufacturing quality. To address this, our research aims to equip robots with human-like perception and learning capabilities that would enable them to adaptively change functions without requiring a team of human experts.”

Fig. 5. The robotic nutplate hole drilling setup.

The application of neuromorphic vision-based control in robotic drilling systems offers immense potential for various industries. In manufacturing, it can enable precise hole positioning for components, ensuring proper alignment and fit. In construction, it can enhance the accuracy of anchor installations, improving structural integrity. Additionally, in resource extraction, such as oil and gas drilling, this advanced control technique can enhance the efficiency of wellbore positioning, reducing costs and minimizing environmental risks.

The ARIC team’s research marks a significant milestone in the development and application of neuromorphic vision-based control for robotic drilling systems. As this technology continues to evolve, it holds the promise of revolutionizing drilling operations across industries, enabling unprecedented levels of precision and efficiency.

“Building on our success with Strata in developing and deploying automated drilling robots, we are currently expanding the range of capabilities of our robotic solutions to perform additional ordinary, yet essential, manufacturing tasks such as sorting, deburring, milling and riveting,” Ayyad says. “We are also collaborating with SANAD Aerotech to develop automated robotic solutions for airplane maintenance, repair, and overhaul applications.”

That’s not all. Ayyad highlights another direction of research stemming from this work: The development of novel vision-based tactile sensing in robotic machining.

“Tactile sensing is crucial for the success of precise and sensitive machining operations to guarantee repeatability and avoid damaging delicate workpieces,” Ayyad explains. “It brings advantages in bandwidth, resolution, and cost-efficiency, compared to conventional tactile sensing approaches. Our researchers are working on developing these sensors and integrating them in different production lines in the UAE.”

Jade Sterling

Science Writer

22 May 2023